Brussels, Belgium – On July 10, 2025, the European Union doubled down on its Artificial Intelligence Act (AI Act) by publishing a General-Purpose AI (GPAI) Code of Practice to guide tech giants like Google, Microsoft, Meta, and OpenAI toward compliance with the bloc’s landmark AI regulation¹. The voluntary code, detailed across nine pages, emphasizes transparency, copyright protection, and safety for AI models like ChatGPT and Gemini, and asks signatories to disclose data sources, training processes, and energy consumption¹. More than 100 tech firms, including Alphabet and Meta, lobbied heavily for a two-year delay, citing high compliance costs and vague rules. The EU nonetheless refused to pause, and GPAI obligations will take effect as scheduled on August 2, 2025². The rules initially looked like a tough road for Big Tech and a changed experience for end users. However, recent moves from Brussels suggest a growing awareness of the need for a more balanced regulatory environment.

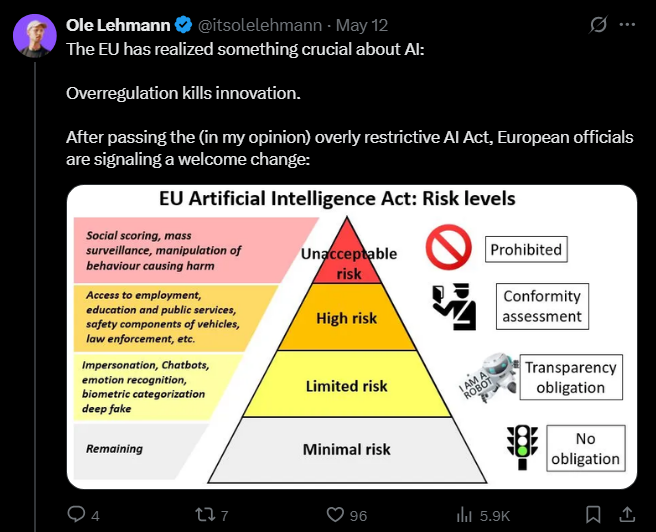

The AI Act, in force since August 1, 2024, is the world’s first comprehensive AI regulation, designed to ensure safe, transparent, and ethical AI systems across the EU’s market of 450 million consumers³. It sorts AI systems into four risk tiers (unacceptable, high, limited, and minimal), imposes strict rules on high-risk systems (e.g., in hiring, credit scoring, and law enforcement), and sets separate obligations for GPAI models³. High-risk systems must meet rigorous requirements, such as risk assessments and human oversight, by August 2026. GPAI providers must comply with transparency and copyright rules from August 2025³. Non-compliance can bring substantial fines. For the most serious violations, fines can reach €35 million or 7% of global annual turnover, whichever is higher. That level of exposure compels Big Tech to adapt³.

For platforms like Meta (Instagram, WhatsApp), Google (Gemini, Search), and Amazon (AWS), the AI Act initially looked like a seismic regulatory event¹. Compliance costs, estimated in the billions, cover hiring auditors, upgrading datasets, and building monitoring systems¹. There were fears that these overheads could raise prices for AI-driven services such as Microsoft’s Copilot or Google’s AI-enhanced tools¹. Some early commentary even suggested that platforms might scale back free-tier offerings in the EU to limit their compliance burden. That would leave users with pricier or less feature-rich services.

End users faced a potential trade-off. The AI Act’s transparency rules include labeling deepfakes and disclosing when people are interacting with a chatbot. These rules aim to build trust, particularly in sensitive contexts like job applications or loan decisions³. Yet stricter rules could also have led to “EU-light” versions of platforms, stripped of features like personalized ads or advanced chatbots. EU users would then have had a downgraded experience compared with other regions⁴.

However, recent developments indicate a pivotal shift in the EU’s approach. Recognizing that overregulation can stifle innovation, European officials are signaling a welcome change in mindset. As Tech Commissioner Henna Virkkunen has underscored, Europe cannot afford to be left behind while tied up in regulatory red tape, emphasizing the need for an environment that is “faster and simpler” for AI investments⁴.

Brussels appears to be finally listening to the tech industry. In February, the EU reportedly scrapped AI liability rules that critics considered overly strict. In March, it began consulting industry on pain points, and in April, it unveiled a new strategy focused on reducing “administrative burden”⁴. Supporters see this recalibration as smart, adaptive policymaking. In their view, it could attract more investment capital, keep European talent from leaving, speed up product development, and produce more competitive AI companies⁴. The EU seems to be learning from past mistakes. It acknowledges that an excessively strict stance could cost it the AI race against the US and China, while a more balanced approach would nurture homegrown innovation⁴. This could be transformative for European startups, freeing them to focus on building breakthrough AI applications instead of drowning in compliance costs⁴.

Outlook: Cautious Optimism for Europe’s AI Future

Big Tech still faces a substantial compliance sprint as the August 2025 deadline nears. However, the narrative is shifting from one of unyielding regulatory burden to one of an adaptive framework. Challenges remain, but the EU’s recent signals suggest a growing commitment to fostering innovation alongside its foundational goals of safe and ethical AI. Europe aims to be a player, not just a referee, in the AI revolution. There is cautious optimism that the continent can finally create better conditions for breakthrough AI companies to thrive. The result could be a more dynamic, if still evolving, AI landscape for users.

Sources:

¹ European Commission. (2025, July 10). General-Purpose AI Code of Practice. Retrieved from https://ec.europa.eu

² Reuters. (2025, July 10). EU Rejects Tech Industry Push to Delay AI Act Compliance Deadlines.

³ European Commission. (2024, August 1). EU Artificial Intelligence Act Enters into Force. Retrieved from https://ec.europa.eu

⁴ Ole Lehmann (@itsolelehmn). (2025, May 12). The EU is FINALLY correcting course on AI regulation. After rushing to pass the “world-first” AI Act in 2023, Brussels is now wisely reconsidering the mess it created [Thread]. X.
