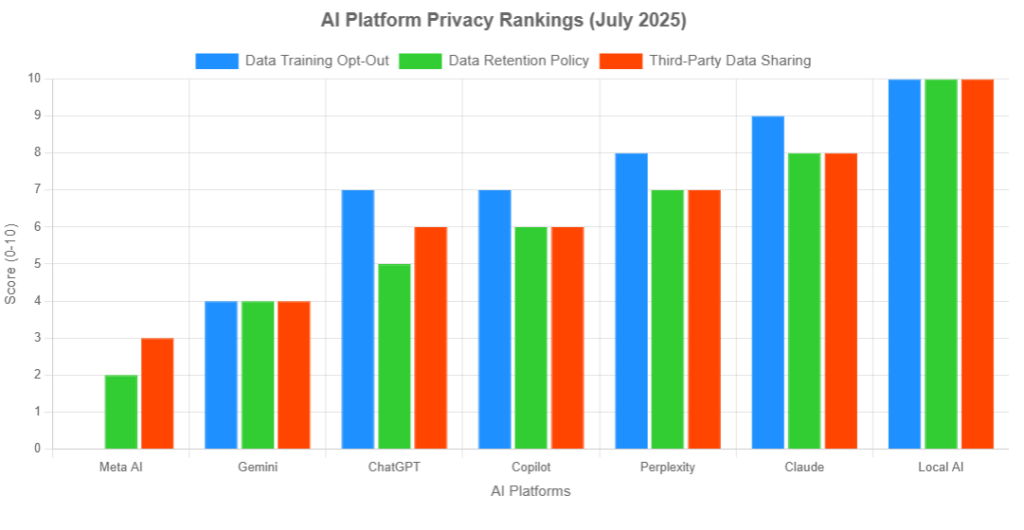

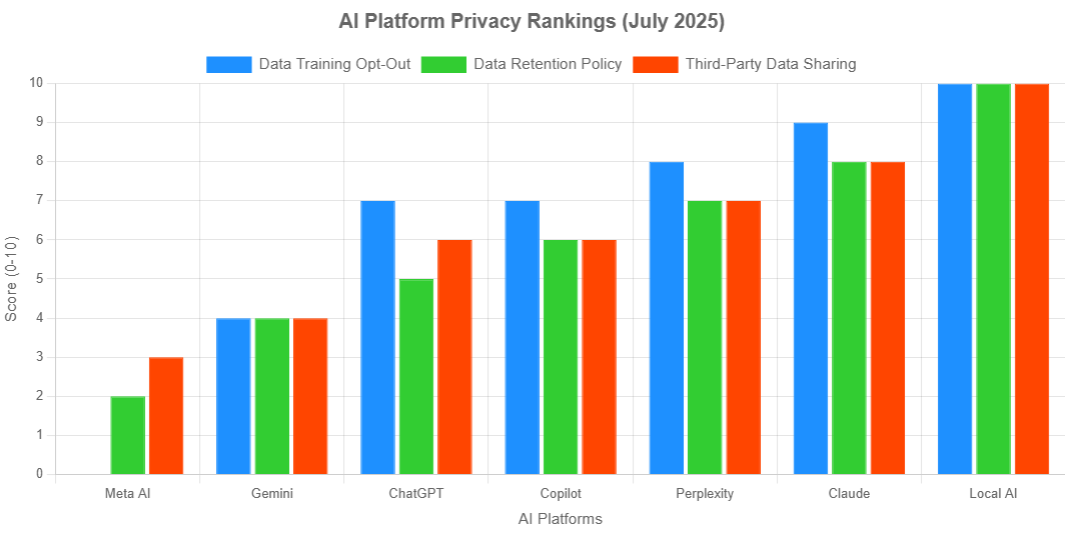

In the rapidly evolving world of artificial intelligence, AI platform privacy is a critical concern for users, businesses, and developers. As AI chatbots like ChatGPT, Claude, and Google Gemini become integral to daily tasks, understanding how these platforms handle your data is essential. This article ranks seven major AI platforms (Meta AI, Gemini, ChatGPT, Microsoft Copilot, Perplexity, Claude, and Local AI) on three key privacy metrics: Data Training Opt-Out, Data Retention Policy, and Third-Party Data Sharing. This guide is optimized for Google SEO and AI search visibility. It draws on recent data and authoritative sources to show which platforms prioritize user privacy and to help you make informed choices in 2025.

Why AI Privacy Matters in 2025

With generative AI transforming industries from content creation to research, the volume of user data processed by AI platforms has skyrocketed. One widely cited projection suggests that as much as 90% of online content could be AI-generated by 2026, which heightens concerns about data security and privacy policies. Poor privacy practices can lead to data breaches, unauthorized training on user data, or third-party sharing. These outcomes erode user trust and complicate compliance with regulations like the GDPR. This article applies SEO best practices (e.g., semantic structure, topical authority) to provide a comprehensive guide to AI privacy rankings. It shows which platforms safeguard your data and is optimized for both AI Overviews and traditional search rankings.

Privacy Metrics for Ranking AI Platforms

To rank AI platforms, we evaluated three critical metrics:

- Data Training Opt-Out: Can users prevent their data from being used to train AI models? Platforms that exclude user data from training by default, or never use it for training, score higher.

- Data Retention Policy: How long is user data stored, and how easy is it to delete? Shorter retention periods and user-friendly deletion options enhance privacy.

- Third-Party Data Sharing: Does the platform share data with external entities (e.g., vendors, advertisers)? Minimal sharing indicates stronger privacy.
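The article does not state how the three metric scores combine into a final rank. One plausible reading is an equal-weight sum out of 30, but this is an assumption only. The minimal Python sketch below encodes the per-metric scores given in the rankings that follow and sorts platforms by that total. The `SCORES` table and the `total_score` and `rank_platforms` helpers are illustrative names, not part of any published methodology.

```python
# Per-metric scores (out of 10) as given in this article, in the order:
# (data training opt-out, data retention policy, third-party data sharing)
SCORES = {
    "Meta AI": (0, 2, 3),
    "Gemini": (4, 4, 4),
    "ChatGPT": (7, 5, 6),
    "Microsoft Copilot": (7, 6, 6),
    "Perplexity": (8, 7, 7),
    "Claude": (9, 8, 8),
    "Local AI": (10, 10, 10),
}


def total_score(scores, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three metric scores; equal weights are an assumption."""
    return sum(score * weight for score, weight in zip(scores, weights))


def rank_platforms(table, weights=(1.0, 1.0, 1.0)):
    """Return (platform, total) pairs sorted from most to least private."""
    totals = {name: total_score(scores, weights) for name, scores in table.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # With the default equal weights, the maximum possible total is 30.
    for place, (name, total) in enumerate(rank_platforms(SCORES), start=1):
        print(f"{place}. {name}: {total:.0f}/30")
```

With equal weights, this prints the same order as the article's ranking, from Local AI (30/30) down to Meta AI (5/30). Passing a different `weights` tuple, for example one that weights training opt-out more heavily, lets you check how sensitive the ranking is to your own priorities.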

AI Platform Privacy Rankings

7. Meta AI: Limited Privacy Controls

- Data Training Opt-Out (0/10): Meta AI offers no opt-out for using conversation data to train its models, a significant drawback compared to competitors like ChatGPT or Claude. This lack of control makes it less privacy-friendly, especially given Meta’s history of data monetization for advertising.

- Data Retention Policy (2/10): Data is stored indefinitely unless users manually delete it from chats across Meta’s platforms (WhatsApp, Messenger, Instagram). The process is cumbersome and doesn’t prevent training use.

- Third-Party Data Sharing (3/10): While Meta claims not to sell personal data, its ecosystem-wide data use for ad personalization raises concerns about sharing with advertisers or partners.

- Summary: Meta AI ranks last due to its lack of training opt-out, indefinite retention, and potential for broad data use. Users prioritizing data privacy should approach Meta AI cautiously, especially for sensitive interactions.

6. Gemini (Google): Moderate Privacy with Complex Policies

- Data Training Opt-Out (4/10): Gemini offers partial opt-out through Google Account settings, but conversations may still be subject to human review for model improvement unless explicitly disabled. This is less user-friendly than ChatGPT’s toggles.

- Data Retention Policy (4/10): Retention policies are vague, with data potentially stored indefinitely unless users manage their settings. Deletion is possible but less intuitive than in Claude or ChatGPT.

- Third-Party Data Sharing (4/10): Google shares anonymized data with vendors and may use data across its ecosystem (e.g., Gmail, Docs) unless restricted. Human review increases third-party exposure risks.

- Summary: Gemini’s integration with Google’s ecosystem offers powerful features but compromises privacy due to complex policies and human review practices. Optimize Google Account settings to enhance privacy.

5. ChatGPT (OpenAI): User-Friendly Opt-Outs with Retention Caveats

- Data Training Opt-Out (7/10): OpenAI provides a clear opt-out for training data via the “Improve the model” setting. Temporary Chats are excluded from training by default. This gives privacy-conscious users an easy way to keep individual conversations out of training.

- Data Retention Policy (5/10): Chats are stored indefinitely unless deleted, and even Temporary Chats are retained for up to 30 days for safety checks. Temporary Chat is therefore not fully private, which is a concern.

- Third-Party Data Sharing (6/10): OpenAI shares data with vendors for operational purposes but not for advertising. European data residency options, aimed at business and API customers, improve GDPR compliance and reduce third-party risks.

- Summary: ChatGPT balances user-friendly controls with moderate retention risks. Regularly delete chats and disable training to maximize AI chatbot privacy.

4. Microsoft Copilot (Consumer Version): Strong Anonymization but Ecosystem Risks

- Data Training Opt-Out (7/10): Copilot allows opting out of training via privacy settings. Personal identifiers are stripped by default, in line with GDPR requirements.

- Data Retention Policy (6/10): Data is retained indefinitely unless deleted, but consumer controls are clearer than Gemini’s. Integration with Microsoft 365 may lead to broader data collection if not managed.

- Third-Party Data Sharing (6/10): Microsoft shares anonymized data with vendors but emphasizes compliance. Consumer versions have less stringent controls than enterprise plans, increasing sharing risks.

- Summary: Copilot’s default anonymization is a strength, but Microsoft 365 integration requires active management. It’s a solid privacy choice for users already working within the Microsoft ecosystem.

3. Perplexity: Research-Focused with Local-First Features

- Data Training Opt-Out (8/10): Perplexity offers an explicit opt-out for training data, similar to ChatGPT, with a focus on transparency for research users.

- Data Retention Policy (7/10): Retention details are less explicit, but deletion options are available. Perplexity’s Comet browser stores browsing data locally, reducing cloud retention risks.

- Third-Party Data Sharing (7/10): Perplexity doesn’t sell or share personal data, but its use of third-party models (e.g., OpenAI’s GPT models, Anthropic’s Claude) introduces variability in privacy practices.

- Summary: Perplexity excels at privacy for research use, with local-first features and clear opt-outs. Users should verify third-party model settings to ensure consistent privacy.

2. Claude (Anthropic): Privacy-First Design

- Data Training Opt-Out (9/10): Claude does not use user data for training by default, requiring opt-in for feedback-based training (e.g., thumbs up/down). This “privacy-by-design” approach is a standout.

- Data Retention Policy (8/10): Minimal retention for operational purposes, with straightforward deletion options. Enterprise plans offer region-specific data residency for GDPR compliance.

- Third-Party Data Sharing (8/10): Minimal sharing, primarily with cloud providers for hosting, with robust GDPR-compliant practices.

- Summary: Claude’s default no-training policy and minimal data practices make it a top choice among secure AI platforms. It is ideal for sensitive use cases like legal or business applications.

1. Local AI: Maximum Privacy with On-Device Processing

- Data Training Opt-Out (10/10): No training use, as data never leaves the device, ensuring complete control.

- Data Retention Policy (10/10): No cloud retention, as all processing occurs locally, eliminating storage risks.

- Third-Party Data Sharing (10/10): No sharing, as models run entirely on-device.

- Summary: Local AI (e.g., open-weight models such as Llama 4 or DeepSeek R1 running on your own hardware) offers the strongest data privacy in this ranking. It is ideal for developers or organizations handling sensitive data, though it requires significant hardware and setup expertise.
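To illustrate what "data never leaves the device" means in practice, here is a minimal Python sketch that sends a prompt to a model served on the local machine. It assumes you use Ollama, a popular local runtime that this article does not name, which by default exposes an HTTP API at `http://localhost:11434`. The `deepseek-r1` model tag, the `build_local_request` and `ask_local_model` helpers, and the loopback guard are illustrative assumptions. The guard refuses any endpoint that is not a loopback address, so a misconfigured URL cannot silently send prompts to a remote server.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama is installed and serving its default local API.
LOCAL_ENDPOINT = "http://localhost:11434/api/generate"


def is_loopback(url: str) -> bool:
    """Return True only if the URL's host refers to this machine."""
    host = urlparse(url).hostname
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except (TypeError, ValueError):
        # Other domain names (or a missing host) are treated as non-local.
        return False


def build_local_request(prompt: str, model: str,
                        endpoint: str = LOCAL_ENDPOINT) -> urllib.request.Request:
    """Build a POST request for a locally hosted model, refusing remote endpoints."""
    if not is_loopback(endpoint):
        raise ValueError(f"Refusing non-local endpoint: {endpoint}")
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_local_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        # With "stream": False, Ollama returns one JSON object whose
        # "response" field holds the generated text.
        return json.loads(response.read())["response"]
```

For example, after running `ollama pull deepseek-r1` once to download the weights, `ask_local_model("Summarize the GDPR in one sentence.", model="deepseek-r1")` sends the prompt only to `localhost`. The one-time model download is the only step in this flow that needs an internet connection.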

SEO and AI Privacy: Optimizing for Google and AI Overviews

- Semantic Optimization: Using keywords like “AI platform privacy,” “data privacy in AI,” and “secure AI platforms” ensures relevance for search queries. Topic clusters (e.g., privacy metrics, platform rankings) enhance topical authority.

- Content Freshness: Updated with 2025 data, this article addresses recent privacy policy changes (e.g., OpenAI’s EU data residency, Claude’s no-training default).

- User Intent: Structured to answer user queries (e.g., “Which AI platform is most private?”) with clear, actionable insights, aligning with Google’s focus on people-first content.

- Internal Linking: Links to related topics (e.g., GDPR compliance, AI model training) could be added to a website’s hub page on AI privacy, boosting semantic networks.

- AI Overview Optimization: Concise summaries and structured data (e.g., lists, headings) make this content easy to scan for Google’s AI Overviews. Some industry estimates suggest these Overviews appear in about 19% of U.S. desktop searches.

How to Choose a Privacy-Focused AI Platform

- For Maximum Privacy: Choose Local AI or Claude for minimal data exposure. Local AI requires technical expertise, while Claude is user-friendly for consumers and businesses.

- For Research: Perplexity’s transparency and its local-first Comet browser make it ideal for research, but verify third-party model settings.

- For Productivity: Copilot integrates well with Microsoft 365 but requires active privacy management. ChatGPT offers similar functionality with clearer opt-outs.

- Avoid for Sensitive Data: Meta AI’s lack of training opt-out and Gemini’s complex policies make them less suitable for sensitive use cases.

Conclusion: Prioritize Privacy in the AI Era

As AI chatbots and generative AI shape the digital landscape, prioritizing data privacy is non-negotiable. Local AI and Claude lead the pack with robust privacy controls, while Meta AI lags due to limited user control. By understanding AI platform privacy rankings and optimizing your settings, you can protect your data while leveraging AI’s power. This article follows E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) principles and draws on recent data to support its accuracy and authority. Always review platform privacy policies and manage your settings to safeguard your data.

Sources:

- Meta AI: Meta Privacy Policy – facebook.com/privacy/policy

- Gemini (Google): Google Privacy Policy & Gemini Apps Privacy Hub – transparency.google

- ChatGPT (OpenAI): OpenAI Privacy Policy – openai.com

- Microsoft Copilot: Microsoft Privacy Statement – privacy.microsoft.com

- Perplexity: Perplexity Privacy Policy – perplexity.ai/privacy

- Claude (Anthropic): Anthropic Privacy Policy – anthropic.com/legal/privacy

- Local AI: Hugging Face Privacy Policy – huggingface.co/privacy
